San Francisco

On New Year's Eve I was at a murder mystery party in Brooklyn. On January 1st I was in an Uber on my way to SFO.

My Airbnb had a furnace in the hallway and hot water that lasted a few minutes at a time. When the month was up, instead of renewing, I found a hacker house on Google Maps, got a tour, and signed up the next day.

Picture a gorgeous San Francisco Victorian. Big bay windows, high ceilings, light everywhere. A communal table where on any given Tuesday you'd find someone training a model, someone whiteboarding a difficult problem, someone soldering a PCB.

Snapshot of my SF year: Pickleball on lunch breaks. Working out of a different coworking space every day. Weekends at hackathons, either competing or coworking with vibes. I also worked out of the offices of the teams building my favorite products, like Replicate in the Mission, where devs come hang out over lunch on Wednesdays.

Running Events

Here's a hack for running events in San Francisco, if you ever need one.

Step 1: Co-run an event with your hacker house. About 1,500 people get added to your personal Luma list.

Step 2: Join a coworking space where members can book rooms automatically through the Luma plugin.

Step 3: Run events. Your invite list keeps growing, and you have free space.

Highlight Reel

AI Build Jam at The Commons: teams took on startup ideas and problems from the field and had testable prototypes by the end of the day. It ran simultaneously in SF and Montreal.

Rachel and I dropped into Montreal remotely for the team pic

For the Solid hackathon, we placed the first Ikea catering order anyone had ever placed at the SF Ikea. We carted trays across the street, ran health challenges in the raffle (one ticket for a sit-to-stand with no hands, two for submitting on time), and followed it up with a Product Hunt launch party on the top floor, complete with a cereal bar and live demos.

Solid "Vibe Labs" volunteers (or, friends from Boston)

Demoing the "Vibe Labs Gallery", a gallery and project submission page created for the Solid hackathon and generated with Solid

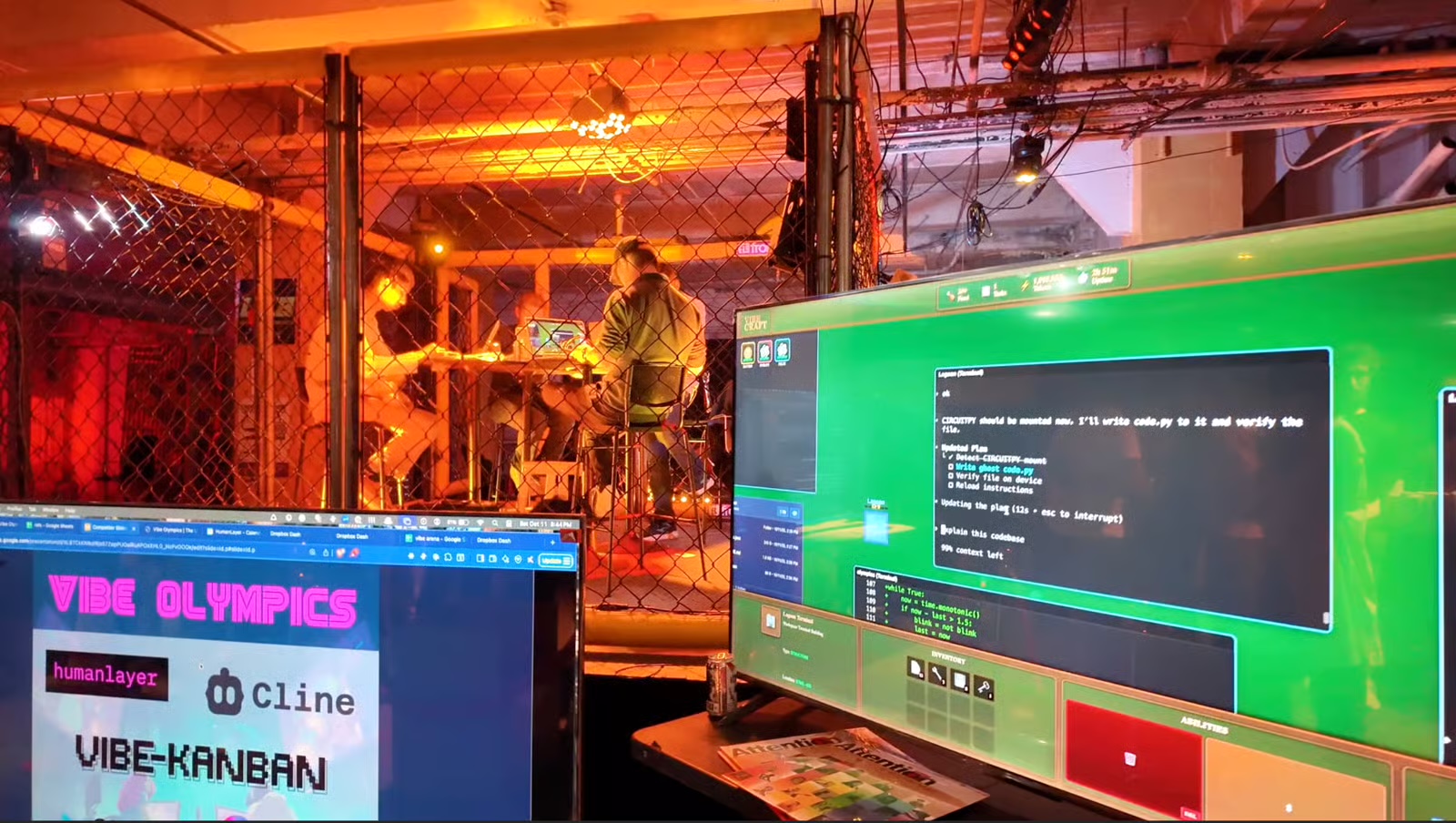

The Vibe Olympics started as a throwaway idea. We beta tested it in the hacker house, and a month later it was in full production in Frontier Tower's basement robot fighting ring.

Building AI Agents Workshop

Sponsored by Hugging Face, Rime, and Codapt. 150 people at Frontier Tower.

The pitch: Building AI agents is fun, easy, and can be free. Don't pay for [insert agent product here]. We'll set up a local model and write some Python to automate the things that zap your brainpower.

The workshop took participants from zero to multi-agent systems in one afternoon: basic agent → custom tools → MCP connections → multi-service integration (Notion + image generation) → multi-agent teams with specialized roles → Gradio web interface → web navigation with Selenium. All using smolagents, all open source.
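Every stage in that progression builds on the same core loop: the model picks a tool, the tool runs, and its output goes back to the model until it returns an answer. smolagents handles this loop for you. The sketch below is plain Python rather than the smolagents API, and `scripted_model` and `get_weather` are hypothetical stand-ins for a local LLM and a real tool so the loop runs without either.

```python
from typing import Callable, Dict, List


def get_weather(city: str) -> str:
    """Hypothetical tool: returns a canned forecast instead of calling an API."""
    return f"Sunny in {city}"


# Registry of tools the agent is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def scripted_model(history: List[dict]) -> dict:
    """Hypothetical stand-in for an LLM: first requests a tool, then answers.

    A real agent would send `history` to a local model and parse its reply.
    """
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "get_weather", "args": {"city": "San Francisco"}}
    return {"final_answer": f"Forecast: {tool_results[-1]['content']}"}


def run_agent(task: str, model=scripted_model, max_steps: int = 5) -> str:
    """Minimal agent loop: ask the model, run the requested tool, repeat."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if "final_answer" in action:
            return action["final_answer"]
        # Dispatch the requested tool and feed its output back to the model.
        result = TOOLS[action["tool"]](**action["args"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached"


print(run_agent("What's the weather in SF?"))
```

The `max_steps` cap is a deliberate guard: without it, a model that keeps requesting tools would loop forever.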

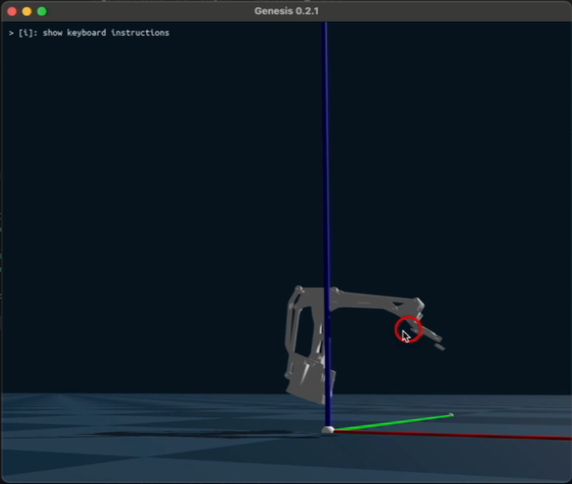

Then we hooked an agent up to a robot arm and had it mix mocktails. The drink_making_agent.py script was the highlight of both the repo and the afternoon.

The room ranged from complete beginners to senior engineers, and watching them pair up and help each other was the best part. Hugging Face launched a Women on Hugging Face community afterward and invited our participants as founding members. Rime gave everyone $100 in credits.

Workshop code: github.com/ltejedor/building-ai-agents

CVPR AI Art Gallery

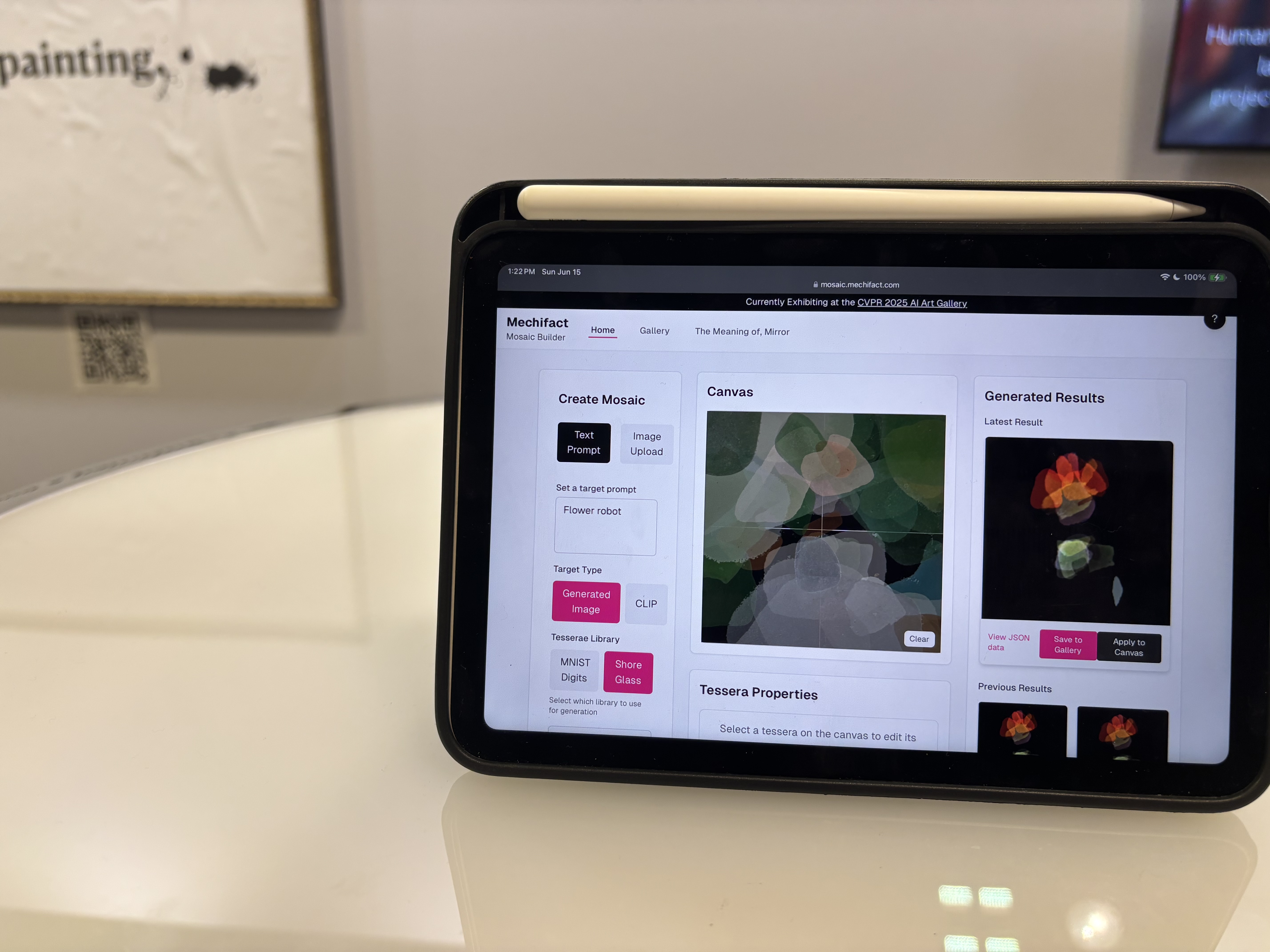

In 2025, my work was featured at the CVPR AI Art Gallery. The piece was "The Meaning of, Mirror", a self-portrait composed from optimized image brushes.

"The Meaning of, Mirror" self-portrait on the right

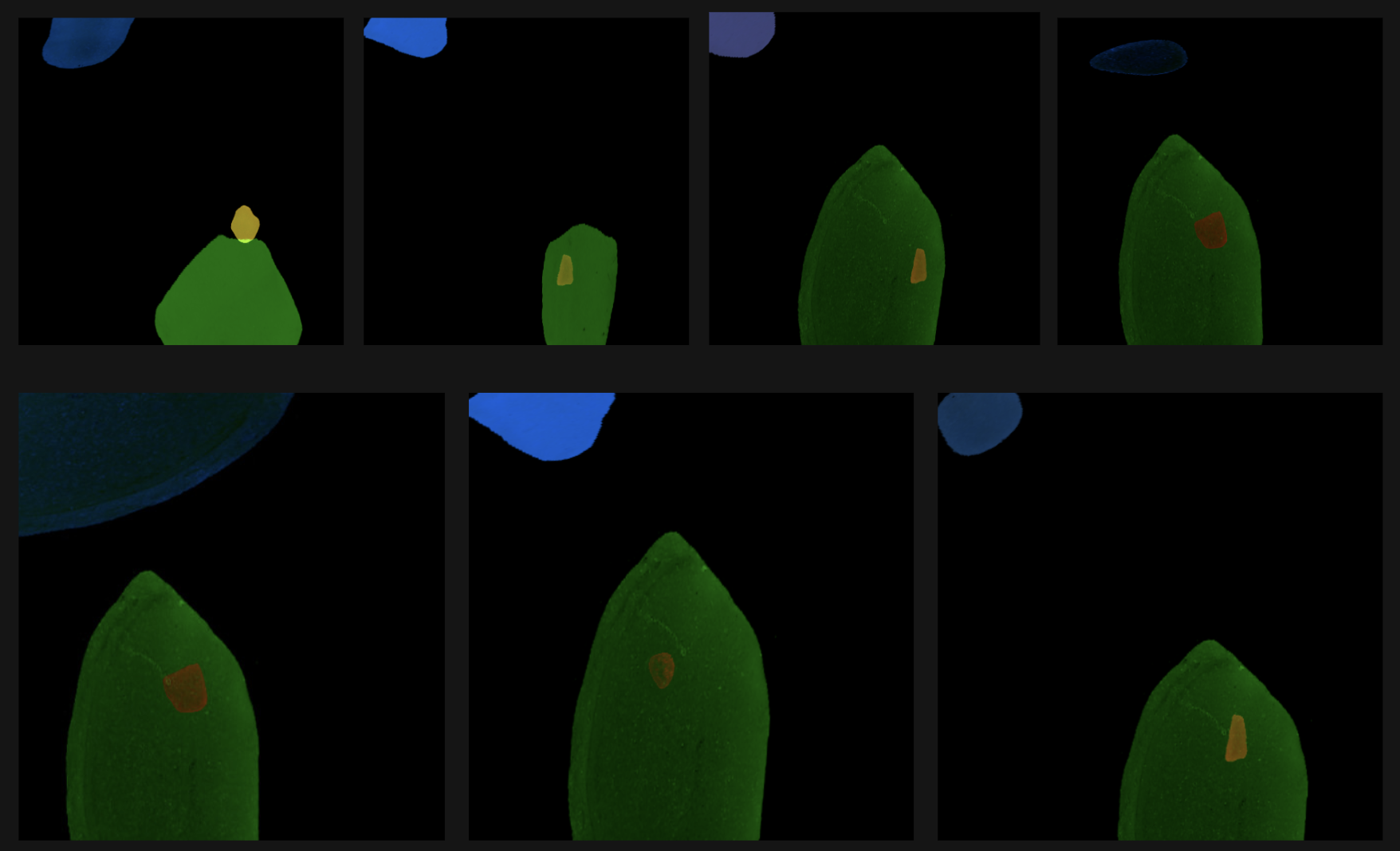

Most generative AI works at the pixel level — diffusion models conjuring images from noise. I wanted something different. Instead of generating pixels, what if the system placed real images — photographs, textures, organic forms — as "brushes" on a canvas, and used gradient descent to optimize their arrangement?

Think of it like this. You have a box of transparent photographs: leaves, tree bark, light through water. You scatter them on a table. Then slowly you start sliding them around, overlapping them, rotating them, adjusting their opacity. With enough patience, a face emerges from the leaves. That's what the system does, except the patience is mathematical. Using neural visual grammars and dual CLIP-based encoders, it minimizes the perceptual distance between the current arrangement and the target through gradient descent, iteratively optimizing each brush's position, scale, color, and rotation until a portrait resolves.
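The core of that loop, sliding brushes downhill on a loss, can be sketched in a few lines of NumPy. This is a deliberately simplified stand-in, not the piece's pipeline: the canvas is 1-D, each "brush" is a Gaussian blob rather than a photograph, only position and intensity are optimized, and pixel-wise mean squared error replaces the CLIP-based perceptual distance so the gradients can be written by hand.

```python
import numpy as np

X = np.linspace(0.0, 1.0, 200)  # pixel coordinates of a toy 1-D canvas
WIDTH = 0.05                    # fixed brush width; scale is not optimized here


def render(pos, amp):
    """Composite Gaussian 'brushes' onto the canvas; returns canvas and per-brush blobs."""
    blobs = np.exp(-((X[None, :] - pos[:, None]) ** 2) / (2 * WIDTH**2))
    return (amp[:, None] * blobs).sum(axis=0), blobs


def loss_and_grads(pos, amp, target):
    """Mean squared error to the target plus analytic gradients for each brush."""
    canvas, blobs = render(pos, amp)
    residual = canvas - target
    loss = float(np.mean(residual**2))
    d_canvas = 2.0 * residual / X.size                    # dL/d(canvas)
    grad_amp = blobs @ d_canvas                           # dL/d(intensity)
    d_blob_d_pos = blobs * (X[None, :] - pos[:, None]) / WIDTH**2
    grad_pos = amp * (d_blob_d_pos @ d_canvas)            # dL/d(position)
    return loss, grad_pos, grad_amp


def optimize(target, n_brushes=6, steps=3000, lr=0.02):
    """Plain gradient descent over every brush's position and intensity."""
    pos = np.linspace(0.05, 0.95, n_brushes)  # scatter brushes evenly
    amp = np.full(n_brushes, 0.3)
    history = []
    for _ in range(steps):
        loss, g_pos, g_amp = loss_and_grads(pos, amp, target)
        history.append(loss)
        pos -= lr * g_pos
        amp -= lr * g_amp
    return pos, amp, history


# Target: two bumps the brushes should slide onto and match.
target = (np.exp(-((X - 0.3) ** 2) / (2 * WIDTH**2))
          + np.exp(-((X - 0.7) ** 2) / (2 * WIDTH**2)))
pos, amp, history = optimize(target)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

The step size is kept small on purpose: position gradients scale with 1/WIDTH², so a large `lr` makes brushes overshoot and oscillate.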

Jellyfish generated by visitors at the CVPR gallery

The moon breaking apart — another visitor creation using the interactive Mosaic Builder

I also built an interactive version so CVPR attendees could create their own work with the same system — choosing brushes, watching the optimization run, stepping in to adjust, stepping back to let it continue.

→ Deep dive into the technical details behind the piece

Embodied Agents

Projects this year that explored embodied AI — AI models operating in physical space.

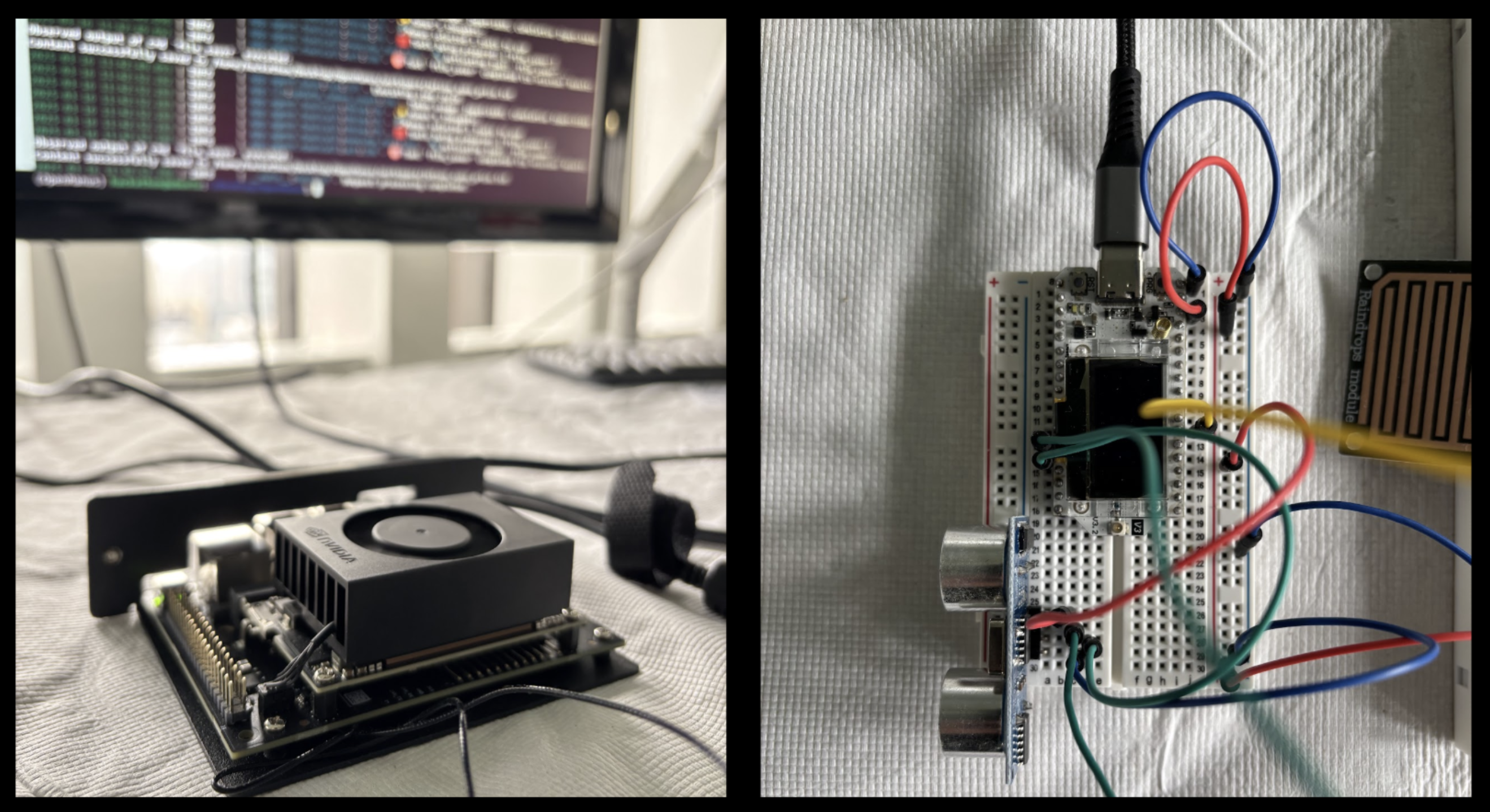

Offline agents for disaster response

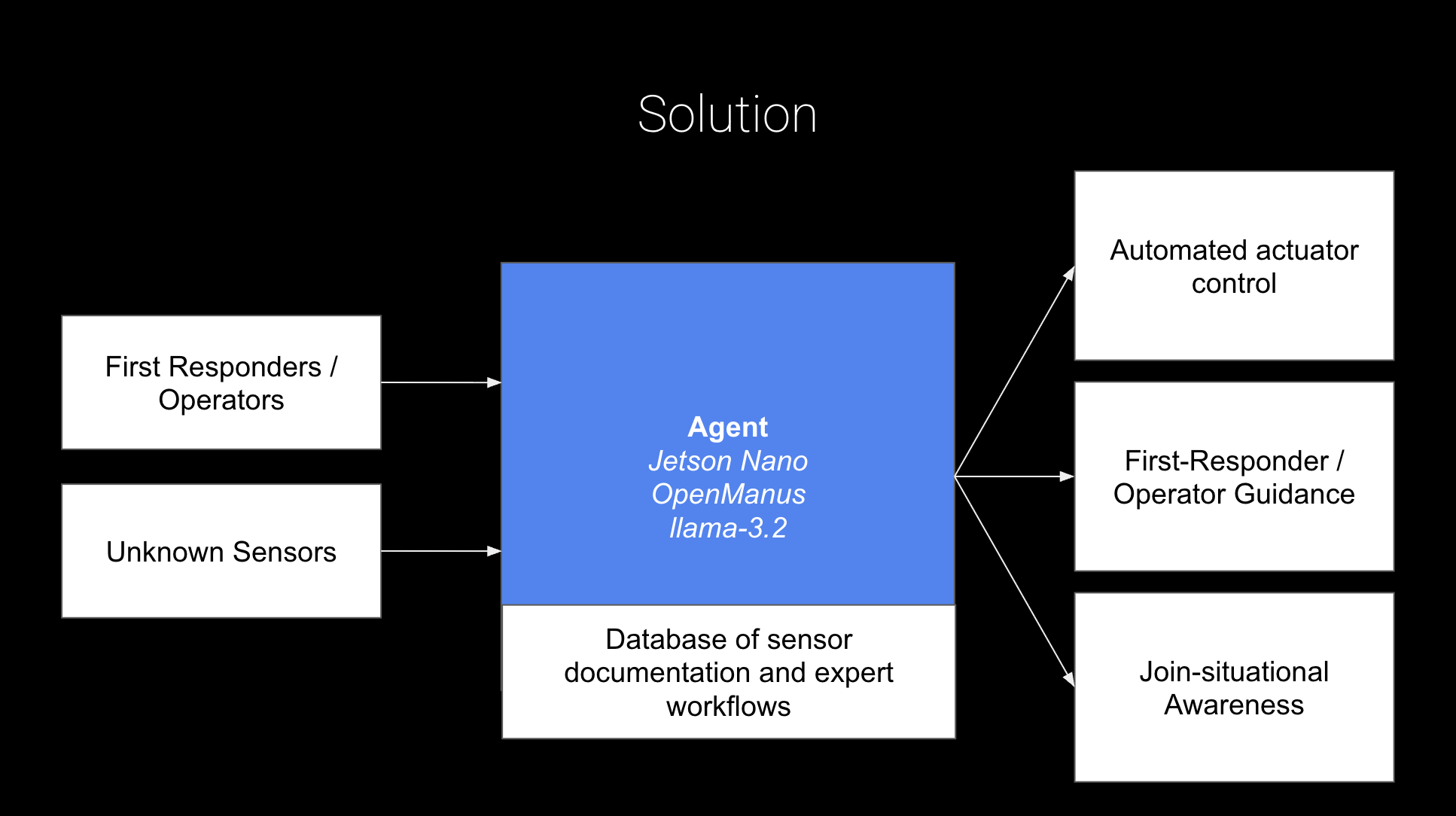

In a disconnected disaster zone, there are sensors everywhere — temperature, humidity, sonar, motion — but nobody has time to configure integrations when the situation is urgent. Can AI agents auto-discover and connect to hardware with zero setup?

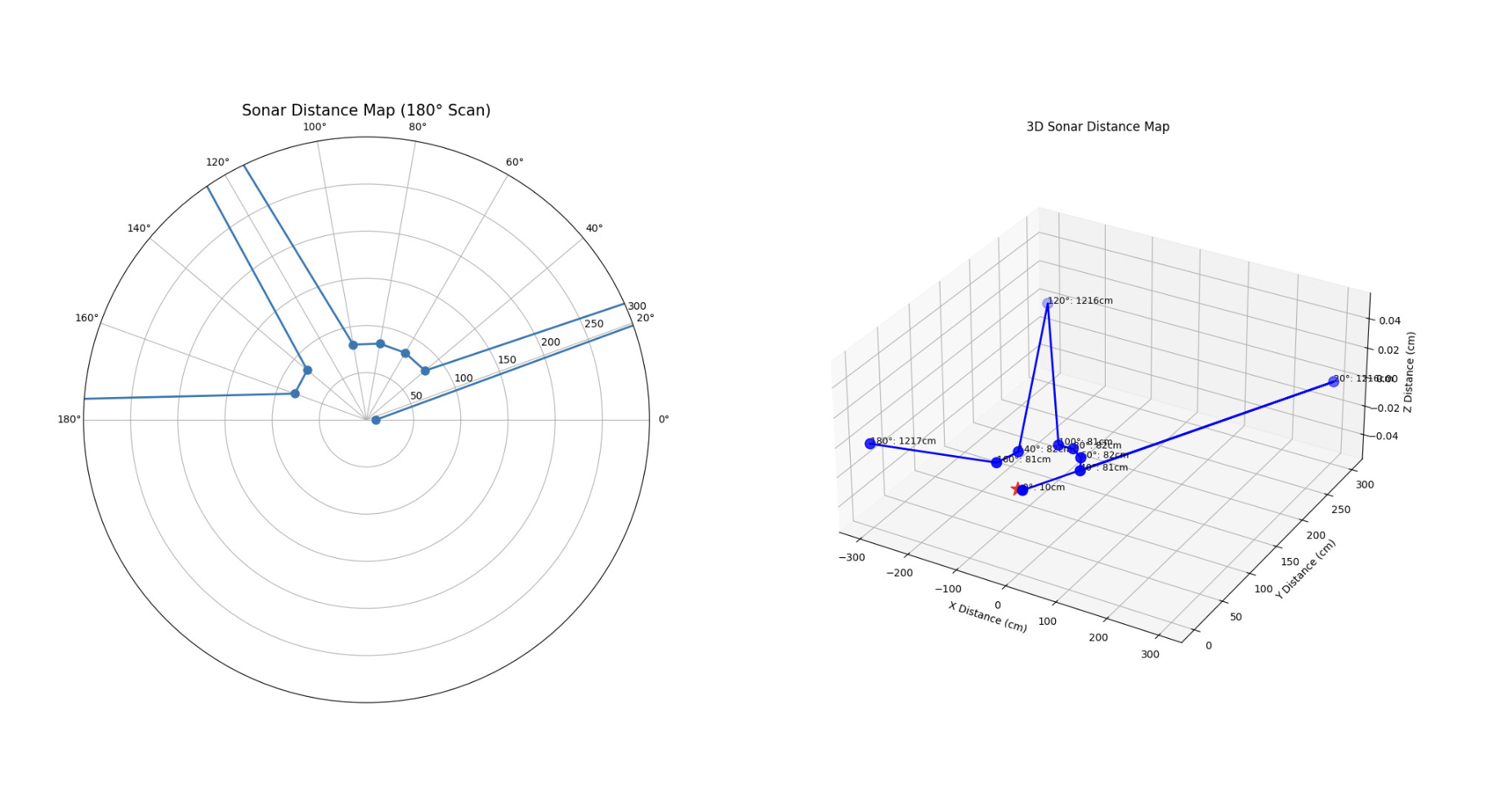

What I built: a Jetson Nano as the brain, ESP32s wired to ultrasonic and raindrop sensors, MCP for standardized sensor discovery, and a fork of OpenManus running entirely offline. Plug in a new sensor, and the agent discovers it, auto-generates an MCP tool definition, and starts visualizing the room autonomously.
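The discovery step can be sketched as turning a sensor's self-description into an MCP tool definition. MCP servers advertise each tool with a `name`, a `description`, and a JSON Schema `inputSchema`; everything else here (the announcement format, the `samples` parameter, the naming scheme) is a hypothetical example, not the format used in embodied-openmanus.

```python
import json
import re


def sensor_to_mcp_tool(descriptor: dict) -> dict:
    """Build an MCP-style tool definition from a hypothetical sensor descriptor.

    The descriptor keys ("id", "type", "unit") are assumptions for illustration.
    """
    # Normalize to a simple lowercase identifier (a design choice in this sketch).
    raw_name = f"read_{descriptor['type']}_{descriptor['id']}".lower()
    name = re.sub(r"[^a-z0-9]+", "_", raw_name).strip("_")
    return {
        "name": name,
        "description": (
            f"Read the latest {descriptor['type']} measurement "
            f"(in {descriptor['unit']}) from sensor {descriptor['id']}."
        ),
        "inputSchema": {
            "type": "object",
            "properties": {
                "samples": {
                    "type": "integer",
                    "minimum": 1,
                    "description": "Number of readings to average (hypothetical parameter).",
                }
            },
            "required": [],
        },
    }


# A newly plugged-in ESP32 announces itself (hypothetical message format).
announcement = '{"id": "ESP32-01", "type": "ultrasonic", "unit": "cm"}'
tool = sensor_to_mcp_tool(json.loads(announcement))
print(json.dumps(tool, indent=2))
```

Generating the definition from data the device reports about itself is what removes the manual setup step: the agent only needs to list tools to learn that a new sensor exists.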

Visualization of the room, created offline and autonomously by Embodied OpenManus with previously unknown sensors

Code: github.com/ltejedor/embodied-openmanus

Training robot designs with physics

I explored whether an LLM could learn to design robots purely through physics simulation — no examples of good designs, just trial and error.

I fine-tuned DeepSeek-R1 with LoRA adapters to generate robot designs in SDF, then piped them straight into the Gazebo physics simulator. The simulator was the only teacher. If the design didn't collapse or error out, +1. If it did, -1. Feed that reward back through GRPO, let the model try again. The whole loop ran on Strong Compute's distributed infrastructure using FSDP.
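The reward and the GRPO advantage step can be sketched as follows. `simulate` is a hypothetical callable standing in for the Gazebo run (it is not Gazebo's API), and the fine-tuning itself (LoRA, FSDP) is not shown. GRPO has no learned value function; instead it samples a group of designs for the same prompt and scores each reward against the group's mean and standard deviation. This sketch uses the population standard deviation, and implementations differ on that detail.

```python
import statistics
import xml.etree.ElementTree as ET
from typing import Callable, List


def physics_reward(sdf_text: str, simulate: Callable[[ET.Element], bool]) -> float:
    """+1 if the design parses and survives simulation, -1 otherwise."""
    try:
        root = ET.fromstring(sdf_text)
    except ET.ParseError:
        return -1.0  # malformed SDF counts as an error
    if root.tag != "sdf":
        return -1.0
    try:
        return 1.0 if simulate(root) else -1.0  # False means the design collapsed
    except Exception:
        return -1.0  # a simulator crash also counts as an error


def group_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """GRPO-style advantages: normalize each reward within its sampled group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical stand-in simulator: any model with at least one link "stays up".
def fake_simulate(root: ET.Element) -> bool:
    return root.find(".//link") is not None


designs = [
    '<sdf version="1.7"><model name="a"><link name="base"/></model></sdf>',
    '<sdf version="1.7"><model name="b"/></sdf>',
    '<sdf version="1.7"><model name="c"><link name="base"/>',  # truncated output
    '<sdf version="1.7"><model name="d"><link name="leg"/></model></sdf>',
]
rewards = [physics_reward(d, fake_simulate) for d in designs]
print(rewards)  # [1.0, -1.0, -1.0, 1.0]
print(group_advantages(rewards))
```

Designs that beat their siblings get a positive advantage and are reinforced; if every design in a group gets the same reward, all advantages are zero and that group contributes no learning signal.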

The key insight: I never defined what a "good" robot looks like. I just asked whether each design could survive contact with physics.

Code: github.com/ltejedor/deepseek-training-with-simulation

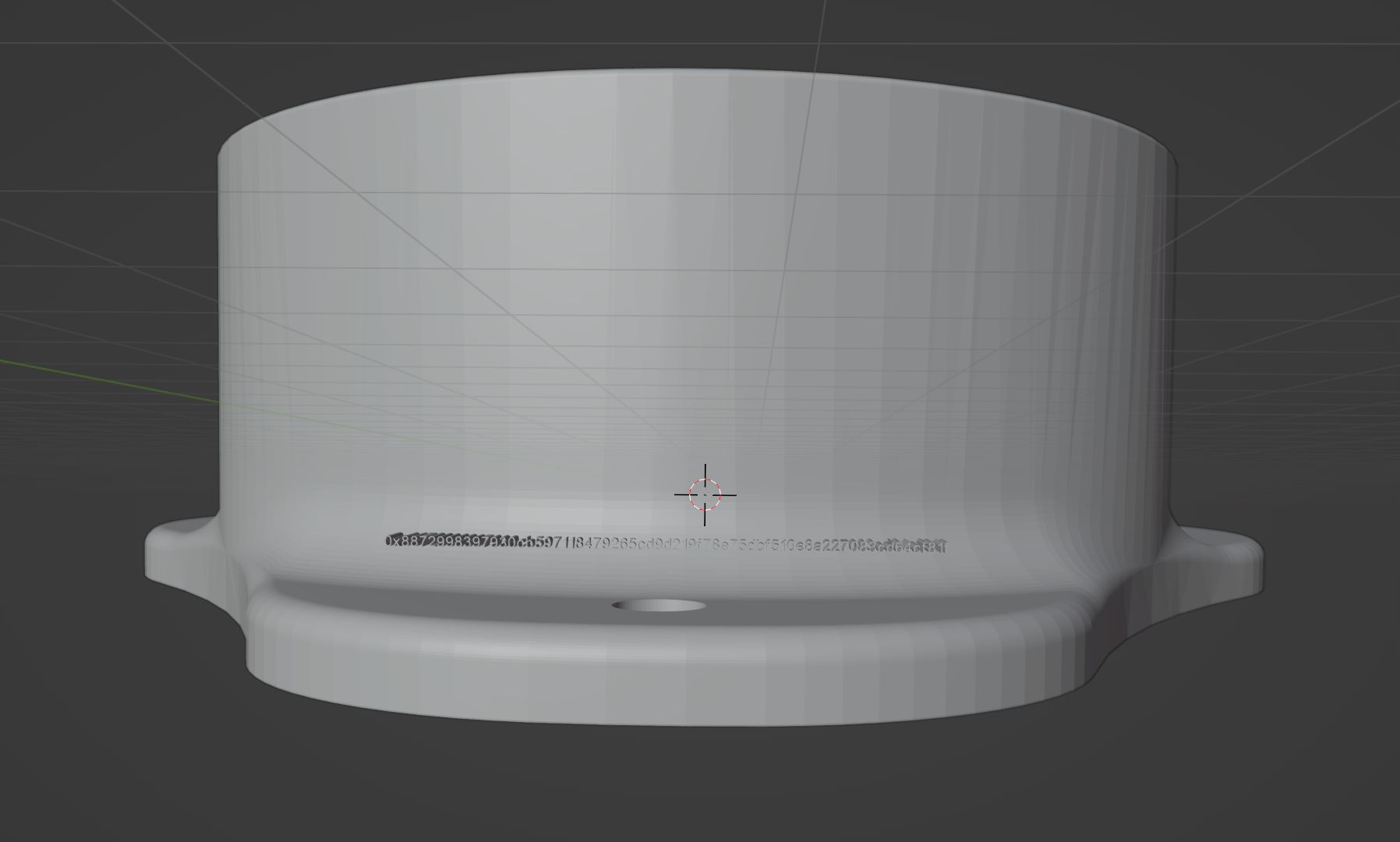

Decentralized identity for robot swarms

What happens when you have thousands of robots accomplishing something together? How do you track them? Distribute training across them?

I built a decentralized identity and training system that unifies physical robots and their simulated digital twins. Register a robot embodiment class on the Arbitrum blockchain. The system auto-generates a digital twin using Roboforge (CAD to URDF conversion) for simulation in the Genesis physics engine. Register a physical instance, and the blockchain transaction hash gets physically embedded into the 3D model using PyVista before printing — embossing the robot's on-chain identity into its body.
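The registration flow can be sketched as a small data model: an embodiment class owns the path to its generated digital twin, and each physical instance is identified by the hash of its registration transaction, which is validated and split into short lines for embossing. Arbitrum is EVM-compatible, so transaction hashes are `0x` plus 64 hex characters. The class names, URDF path, and 22-character line length are hypothetical; the blockchain call, Roboforge conversion, Genesis simulation, and PyVista mesh work are not shown.

```python
import re
from dataclasses import dataclass, field
from typing import List

TX_HASH = re.compile(r"0x[0-9a-fA-F]{64}")  # 32-byte EVM transaction hash


def emboss_lines(tx_hash: str, chars_per_line: int = 22) -> List[str]:
    """Validate a transaction hash and split its hex digits into short lines.

    Shorter lines keep embossed characters large enough to survive printing.
    """
    if not TX_HASH.fullmatch(tx_hash):
        raise ValueError(f"not a 32-byte hex transaction hash: {tx_hash!r}")
    digits = tx_hash[2:].lower()
    return [digits[i:i + chars_per_line] for i in range(0, len(digits), chars_per_line)]


@dataclass
class RobotInstance:
    tx_hash: str  # on-chain identity of one physical robot


@dataclass
class EmbodimentClass:
    name: str
    urdf_path: str  # digital twin generated from CAD (hypothetical path)
    instances: List[RobotInstance] = field(default_factory=list)

    def register_instance(self, tx_hash: str) -> List[str]:
        """Record a physical robot and return the text lines to emboss on its body."""
        lines = emboss_lines(tx_hash)  # validate before storing anything
        self.instances.append(RobotInstance(tx_hash.lower()))
        return lines


arm = EmbodimentClass("gripper-arm", "twins/gripper_arm.urdf")
lines = arm.register_instance("0x" + "ab" * 32)
print(lines)
```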

Code: github.com/ltejedor/sepiol_storage

30 Days of Agents

In May, I posted on Bluesky: "hey #buildinpublic, what are you using ai agents for rn?" Crickets. So I followed up: "really, no one? alright, let's do this." For the next 30 days: a new AI agent workflow every day, open source.

The highlights: a WhatsApp bot that sent haunted, glitchy messages in group chats. The first FigJam MCP server.

The FigJam MCP server in action — an agent creating and manipulating a FigJam board

A proof-of-work system for AI agents with knowledge graph visualizations. A self-assembling agent that scours ML leaderboards to find the best model for each task. A 3D environment — inspired by Sonic's Chao Garden — where you can walk around and interact with AI agents in real space, interrupt their runs with live feedback, and watch them work. That last one won Rime's prize at the MCP and A2A Hackathon.

Mechifact (from days 18-19) — tracing agent steps, tool calls, and token usage through knowledge graphs and timelines
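The tracing idea behind Mechifact can be sketched as folding a list of recorded agent steps into per-tool call counts, total token usage, and edges for a graph view. The `Step` fields and the node-naming scheme are assumptions for illustration, not Mechifact's actual data model.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    """One recorded agent step (hypothetical trace format)."""
    index: int
    tool: Optional[str]  # None when the step was pure reasoning
    input_tokens: int
    output_tokens: int


def summarize(steps):
    """Aggregate tool calls and tokens, and build edges for a trace graph."""
    tool_calls = Counter(s.tool for s in steps if s.tool)
    total_tokens = sum(s.input_tokens + s.output_tokens for s in steps)
    # Timeline edges link consecutive steps; tool edges link a step to its tool.
    edges = [(f"step{a.index}", f"step{b.index}") for a, b in zip(steps, steps[1:])]
    edges += [(f"step{s.index}", f"tool:{s.tool}") for s in steps if s.tool]
    return {"tool_calls": dict(tool_calls), "total_tokens": total_tokens, "edges": edges}


trace = [
    Step(1, None, 120, 40),
    Step(2, "web_search", 200, 30),
    Step(3, "web_search", 260, 25),
    Step(4, "write_file", 300, 60),
]
summary = summarize(trace)
print(summary["tool_calls"])    # {'web_search': 2, 'write_file': 1}
print(summary["total_tokens"])  # 1035
```

Because tools become shared nodes, repeated calls to the same tool show up as a hub in the graph, which makes loops and over-used tools easy to spot.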

Site: agents.mechifact.com | Code: github.com/ltejedor/agents

→ Tutorial: building on what I learned during the challenge

Thanks to Megha, Daniela, Rachel, Trevor, Riley, Sahas, Katie, the TTB cohort, and many others for an inspiring 2025.